Teaching drones how to learn on the fly

Alexandra George

Oct 8, 2018

If you’ve ever been to the zoo with a four-year-old, you know how they curiously look around to understand what all of the new animals are: observing how a tiger looks like a cat or moving around to discover where a bear might be blending into its surroundings. Electrical and computer engineering (ECE) and CMU Silicon Valley Professor Bob Iannucci and ECE Ph.D. candidate Ervin Teng are asking how drones can mimic this behavior by becoming curious themselves. To do so, they are using machine learning and a simulation training tool to teach drones how to learn in real time, an approach they call “autonomous curiosity.”

Drones provide us with the ability to reach difficult points of view, but it usually takes a human to safely guide the flight and make sense of the images the drone captures. Deep learning and machine learning have the capability to interpret and analyze images and video. The researchers have combined these two technologies to get the vantage points of a drone without having a human analyst tracking and studying each image. This opens the possibility of assisting humans in applications like emergency response, where the subject might be unknown or difficult to identify.

But a major roadblock still exists: training the algorithms on board the drone to recognize specific subjects when they are found, as the mission unfolds. When you don’t know in advance what the object is going to be, you can’t pre-train a network to recognize it. The mobility of drones offers an advantage: when pointed at a subject of interest, a drone can fly around and over it. In the process, it creates a rich stream of video images that can be used to train a neural network.

“We’re trying to give drones the ability to say, ‘Oh I know how to explore that, because I’ve been trained in how to explore things, generally.’ When it’s curious it can build its own model of whatever it is that we’re trying to track,” says Iannucci.

In their previous research, the team found a way to track an object in a video image that was pointed out by a human operator, and segment it from its background. Then, they trained the drone to guide its own movement to gather a richer set of images of the object of interest. To do this, the team created VIPER, a simulation training tool.

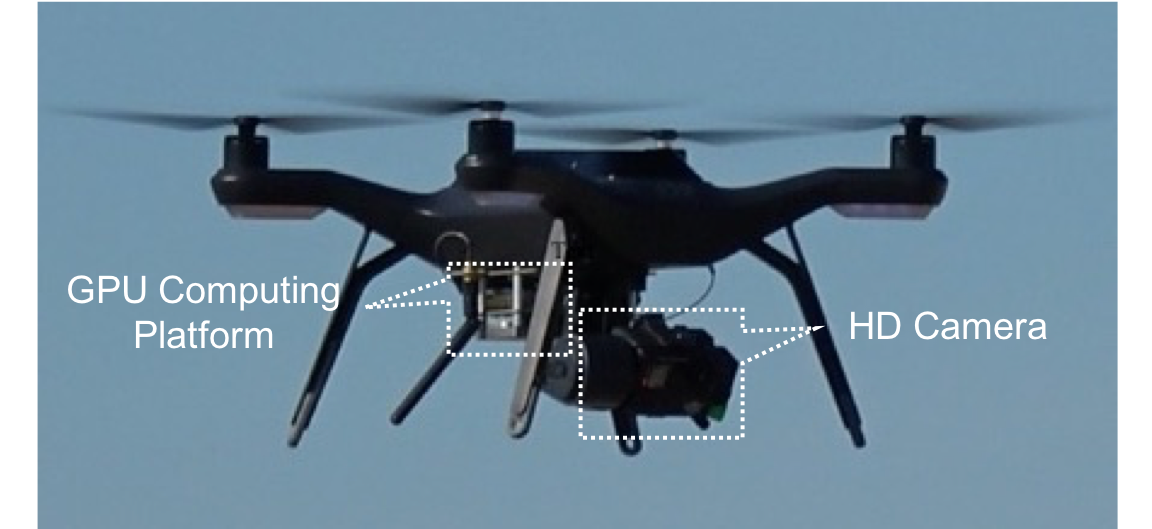

Drone. Source: CMU Silicon Valley

VIPER provides a virtual training environment that supports research on drone behavior through photo-realistic imagery, realistic physics, and faster-than-real-time flight. Instead of a thousand test flights, VIPER can stage a thousand different virtual scenes to teach the virtual drone’s neural-network-enhanced flight controller to be curious. Then, that trained curiosity can be used without modification to make a real-world drone curious.

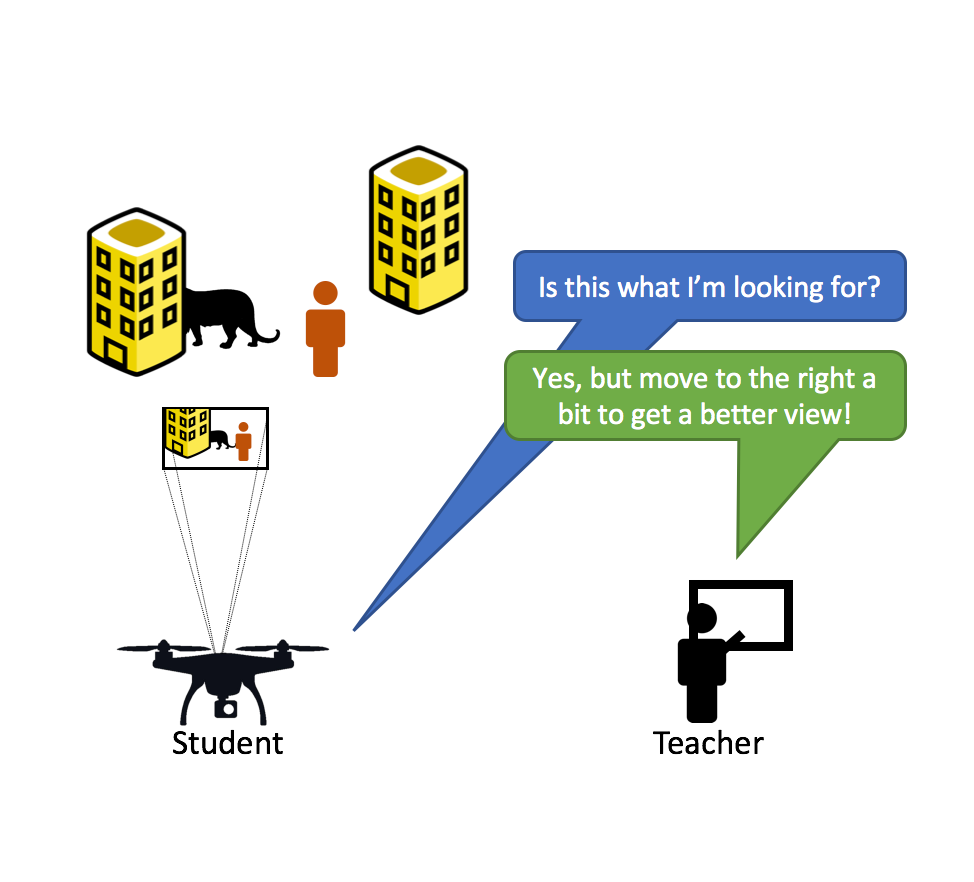

Autonomous curiosity involves first training a “teacher” neural network, or curiosity agent, in VIPER. Then, once in the field, the “teacher” network guides the flight controls of the drone so that a “student” neural network can focus on learning the specific object of interest. The teacher has already learned how to move around to avoid objects in the scene that block the object of interest. It also knows how to find scenes where the “student” is having difficulty. In the zoo example, the parent would be like the “teacher” neural net, the child would be the “student,” and the tiger would be the object of interest.

Teacher-student diagram. Source: Carnegie Mellon University

With this knowledge, the drone can fly to positions that maximize its ability to learn how to recognize an object. This online type of training avoids the necessity of training the student neural network offline to recognize specific subjects and instead enables dynamic learning: on the fly, so to speak.

“Like a good student, you want it to only ask the teacher for further instruction when it’s unsure of itself or confused,” says Teng, the project’s team lead. “This allows us to build a smaller and more effective training set, rather than having it just passively sit there and have the human annotate.”
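The idea of a student that asks for help only when it is unsure can be illustrated with a small sketch. The article does not say how the team measures the student’s uncertainty, so the code below assumes one common choice: the entropy of the student’s predicted probability that a frame contains the object of interest. The function names, the example confidences, and the 0.8 threshold are all hypothetical and are not taken from the researchers’ system.

```python
import math


def binary_entropy(p: float) -> float:
    """Entropy in bits of a yes/no prediction with probability p.

    Returns 0.0 when the student is certain (p is 0 or 1) and 1.0 when it
    is maximally unsure (p is 0.5).
    """
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def select_frames_to_label(confidences, threshold=0.8):
    """Return indices of frames whose prediction entropy exceeds threshold.

    Only these frames would be sent for further instruction; confident
    frames are skipped, which keeps the training set small.
    (Hypothetical helper, assumed for illustration.)
    """
    return [i for i, p in enumerate(confidences) if binary_entropy(p) > threshold]


# Hypothetical student outputs for five video frames: the probability that
# each frame shows the object of interest.
student_confidences = [0.98, 0.55, 0.10, 0.42, 0.91]

queried = select_frames_to_label(student_confidences)
print(queried)  # [1, 3]
```

In this sketch, only the two frames where the student’s prediction is close to a coin flip are flagged, while the frames it classifies confidently in either direction are left alone.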

Iannucci and Teng have tested the drones at Camp Roberts as part of the Joint Interagency Field Experimentation program offered through the Naval Postgraduate School. During the final test flight in early August, they worked on expanding the drone’s range of motion, giving the drones time to gain practical experience in the field. The drone exhibited positive behavior: after it was told which building to identify, it moved around to get different angles, differentiated the subject from other buildings, and spent more time on angles where the subject was partially obstructed or could be confused with other buildings. With these promising results, autonomous curiosity could have applications from emergency response to smart cities.

“The research question is, ‘Will curiosity learned in this sterile simulation environment generalize to the real world? Or is it just that it’s so sterile that when you learn how to be curious in a virtual setting, it might not work in the real world?’” says Iannucci. “It turns out that it does, and that’s the breakthrough.”